AI Agent Development, Evaluation, and Optimization

Where a traditional LLM can analyze flight schedules or suggest hotels and their prices, an agent can receive this information from the LLM, work out the right sequence of actions, and book the flights and hotels. This capability transforms AI from a passive knowledge source to an active and resourceful assistant.

This article explores agentic system architecture, compares AI agent frameworks, provides code examples, and introduces methods for measuring the performance and accuracy of agentic workflows.

Summary of key AI agent development concepts

AI agentic architecture

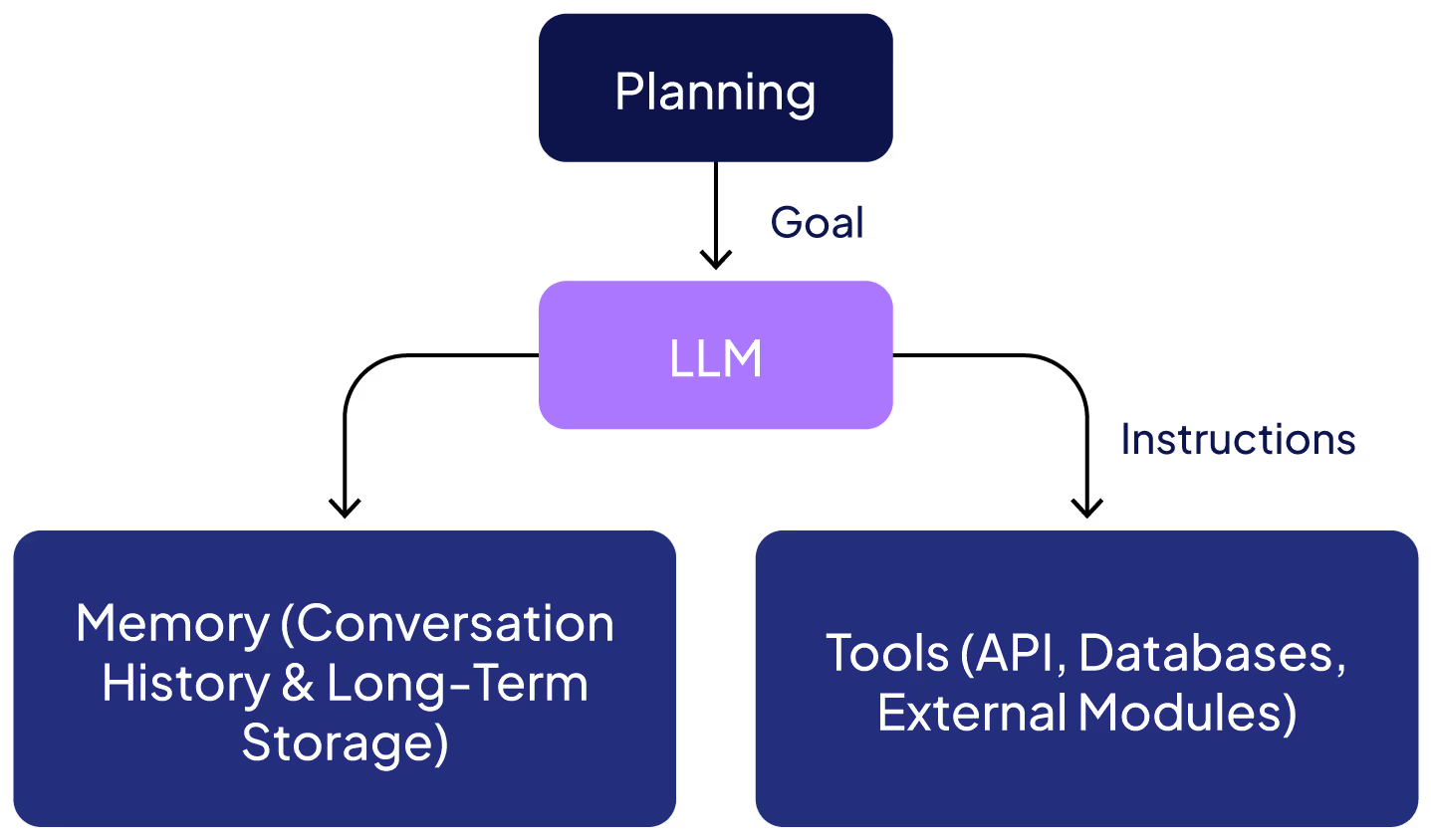

To move beyond static text generation and perform real-world tasks autonomously, an agent must integrate four major building blocks:

- Large language models (LLMs): The LLM is the primary decision-making component of the agent, helping it understand language inputs and respond with context-relevant outputs.

- Tools: The LLM can converse, but it cannot execute tasks in the real world without tools. Tools can include APIs that provide information, like weather and location, or ones that execute tasks like scheduling a calendar event or making a purchase online. Involving these tools at appropriate times is what enables the agent to translate abstract thinking into tangible action.

- Memory: Memory enables the agent to store and maintain the state of workflow steps, API calls, and user conversation history, providing additional context to optimize the LLM’s reasoning capabilities. Implementing both short-term and long-term memory allows Agents to maintain context within a current interaction (short-term) while also learning and adapting based on past experiences (long-term), leading to more personalized and relevant responses over time.

- Planning: A taskplanner or an orchestrator is responsible for dividing the user's request into several parts and understandable tasks. It defines the sequence in which these tasks should be accomplished and how to choose the right tools for each operation. The LLM then executes this plan and utilizes the memory to stay on track and the tools to accomplish the subtasks.

Here is a simple diagram that illustrates this architecture.

Pseudocode for a minimal agent might look like this:

def agentic_loop(goal, llm, planner, tools, memory):

# Step 1: Decompose goal into subtasks.

subtasks = planner.decompose(goal)

for subtask in subtasks:

# Step 2: Use the LLM to interpret the task, considering stored memory.

approach = llm.generate_approach(subtask, memory.get_context())

# Step 3: Execute subtask with the relevant tools.

result = llm.execute(approach, tools)

# Step 4: Store the result to memory

memory.update_context(subtask, approach, result)

# Step 5: Finalize answer from the context

return planner.finalize(memory.get_context())

A concrete example using the LangChain framework that implements the pseudocode above might look like the following. Note that the comments in the code snippet are placed to help readers understand the code’s structure.

import os

from langchain.llms import OpenAI

from langchain.agents import Tool, initialize_agent

from langchain.memory import ConversationBufferMemory

# ==============

# 1. Setup LLM

# ==============

# Replace "YOUR_API_KEY" with your actual OpenAI API key or configure your environment accordingly.

os.environ["OPENAI_API_KEY"] = "YOUR_API_KEY"

llm = OpenAI(temperature=0.7)

# ======================

# 2. Define Tools (APIs)

# ======================

def flight_search_api(destination: str, date: str) -> str:

"""

Mock function to simulate flight search.

In a real application, this would query airline APIs to get

flight data.

"""

# In practice, you'd return structured data (e.g., JSON), but here we return text for simplicity.

return f"Available flights to {destination} on {date}:

FlightA, FlightB"

def flight_book_api(flight_id: str) -> str:

"""

Mock function to simulate flight booking.

In a real application, this would finalize a booking

and return confirmation details.

"""

return f"Flight '{flight_id}' has been successfully booked!"

# Create LangChain tools wrapping the functions above:

flight_search_tool = Tool(

name="FlightSearch",

func=lambda query: flight_search_api(**query),

description="Tool to search for flights given a destination and date."

)

flight_book_tool = Tool(

name="FlightBooking",

func=lambda query: flight_book_api(**query),

description="Tool to book a flight using a flight ID."

)

# ===================

# 3. Memory (State)

# ===================

# This memory tracks the conversation, so the LLM can recall prior steps and context.

memory = ConversationBufferMemory(memory_key="chat_history", return_messages=True)

# =========================

# 4. Planning + Agent Setup

# =========================

# The agent uses the LLM to "plan" (reason about next steps) and then calls tools as needed.

tools = [flight_search_tool, flight_book_tool]

# We choose a standard "zero-shot" ReAct agent for simplicity;

# it will parse user instructions, plan, then invoke the tools accordingly.

agent = initialize_agent(

tools=tools,

llm=llm,

agent="zero-shot-react-description",

memory=memory,

verbose=True # Set to True to see the chain-of-thought planning in the console/logs.

)

# =====================

# 5. Run the Agent Flow

# =====================

# Example user query that includes searching and booking in one go:

user_input = (

"Find me a flight to San Francisco next Tuesday. "

"Then book FlightA from the results."

)

response = agent.run(user_input)

print("\nFinal Agent Response:\n", response)

Multi-agent development: Why we need multiple agents

Agents are trained to perform specific tasks well but usually disappoint when asked to multitask, so it is necessary to use multiple agents to perform different task types. For instance, when launching a new software product, one agent may be required to develop marketing copy, another to analyze server performance metrics, and a third to ensure legal compliance. Multi-agent LLMs are designed to overcome the limitation imposed by specialization by dividing the workload among several agents, each of which possesses different skills.

Specialized agents

Since each agent is prompted for a particular subdomain—such as financial analysis, legal text review, image processing, creative writing, or system integration—the full system benefits from more profound expertise in each area. Tasks are divided up and completed by separate, dedicated AIs that are better at doing them.

Task distribution

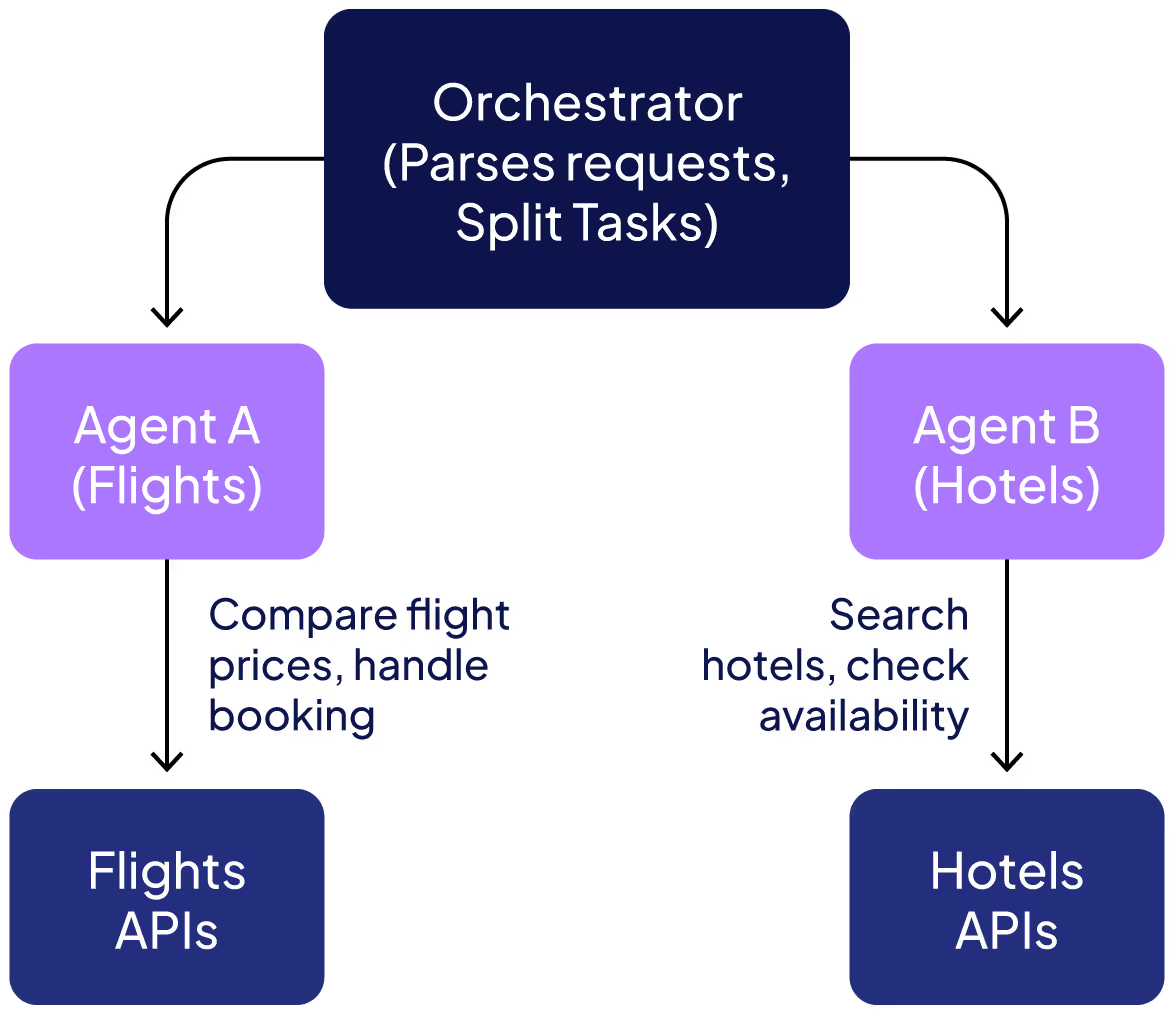

In a multi-agent architecture, a task orchestrator sits at the top of the hierarchy. It takes a user goal, breaks it down into tasks, and then assigns each task to the agent it thinks is the best fit for executing that task. The distribution of tasks also helps expedite the process by executing them in parallel. Agents normally function independently to execute their assigned tasks but may also interact with other agents and exchange context.

A simple two-agent example

Let’s review a simple example of agents planning a trip to San Francisco. In the diagram below, Agent A is responsible for comparing flight prices and booking flights, while Agent B is responsible for hotel selection and reservation. The agents make tool calls to the respective API for flights or hotels.

When processing a user's single, high-level request—in this case, “plan my business trip to San Francisco next Tuesday”—the orchestrator assigns tasks to two agents to work on that request. Agent A searches for flights and recommends the best one; after the user approves the recommendation, it makes the booking. At the same time, Agent B helps find suitable hotels near the meeting venue, checks their availability, and makes the reservation upon user approval. We will return to this example later when we evaluate the performance of these agents using Patronus AI.

The code below demonstrates how an orchestrator might coordinate the two specialized agents described above—Agent A (flights) and Agent B (hotels)—to fulfill a single high-level user request. The comments inserted in the code help explain the code blocks.

def orchestrateBusinessTrip(userRequest):

# 1. Parse the user request and extract relevant details.

requestDetails = parseRequest(userRequest)

destination = requestDetails.destination # "San Francisco"

travelDate = requestDetails.date # e.g., "Next Tuesday"

userPreferences = requestDetails.preferences # optional: budget, airline preference, etc.

# 2. Create subtasks for each agent.

flightTask = {

"type": "flight_search_and_book",

"destination": destination,

"date": travelDate,

"preferences": userPreferences

}

hotelTask = {

"type": "hotel_search_and_book",

"location": destination,

"date": travelDate,

"preferences": userPreferences

}

# 3. Assign subtasks to agents.

# The orchestrator can run these in parallel or sequentially, depending on system design.

flightOptions = AgentA.searchFlights(flightTask)

hotelOptions = AgentB.searchHotels(hotelTask)

# 4. Collect agent recommendations and ask user for confirmation.

chosenFlight = getUserApproval(flightOptions, "Select a preferred flight.")

if chosenFlight is not None:

AgentA.bookFlight(chosenFlight)

chosenHotel = getUserApproval(hotelOptions, "Select a preferred hotel.")

if chosenHotel is not None:

AgentB.bookHotel(chosenHotel)

# 5. Finalize and compile itinerary.

finalItinerary = compileItinerary(chosenFlight, chosenHotel)

# 6. Return or display final output.

return finalItinerary

# ===================

# Agent A (Flight Agent)

# ===================

def searchFlights(flightTask):

"""

Query multiple airline APIs to gather flight options.

Return a ranked list of flights based on user preferences.

"""

flightOptions = queryAirlineAPIs(

flightTask["destination"],

flightTask["date"],

flightTask["preferences"]

)

return flightOptions

def bookFlight(selectedFlight):

"""

Call the airline API to finalize booking.

Return a confirmation or booking reference.

"""

confirmation = confirmFlightBooking(selectedFlight)

return confirmation

# ===================

# Agent B (Hotel Agent)

# ===================

def searchHotels(hotelTask):

"""

Query hotel booking APIs for availability.

Return a ranked list of hotels based on user preferences.

"""

hotelOptions = queryHotelAPIs(

hotelTask["location"],

hotelTask["date"],

hotelTask["preferences"]

)

return hotelOptions

def bookHotel(selectedHotel):

"""

Call the hotel booking API to finalize the reservation.

Return a confirmation or booking reference.

"""

confirmation = confirmHotelBooking(selectedHotel)

return confirmation

Advantages of multi-agent systems

- Efficiency: Distributing tasks among specialized agents reduces the cognitive load on a single LLM, allowing the system to perform tasks more quickly and effectively.

- Flexibility: Agents can work in parallel or a sequence and can vary the tasks and their interactions with each other as needed.

Challenges with multi-agent systems

- Aligning context: The problem of context coordination across multiple agents can become complex. It is very important to align the context so that the right context is provided to the right agent.

- Consistency: If several agents produce parts of the final product or plan, the system must ensure that the resulting output is structurally and stylistically coherent.

{{banner-large-dark-2="/banners"}}

AI agent development frameworks

As the concept of agentic AI gains popularity, several frameworks and platforms have emerged to streamline development. Here is a high-level overview of popular options.

Each framework serves different needs. If you're a small startup looking for a quick route to a minimum viable agent product using open-source tools, LangChain or CrewAI would be your best bet. For large enterprises deeply invested in Azure, Microsoft Autogen could be more appealing. And if you're comfortable building a workflow from the ground up, OpenAI's APIs offer a viable foundation.

We omitted frameworks like FlowiseAI and Langflow from our discussion above. These frameworks are no-code or low-code solutions but are more limited in functionality than the solutions we discussed.

AI agent evaluation

Evaluating how well an LLM-powered agent performs is more complex than considering a single response from a traditional chatbot. Agent-based systems involve multiple decision points, and they may make numerous calls to the LLM (or even various LLMs) in a single workflow.

LLM evaluation challenges

Here are some key reasons why agent evaluations are notoriously difficult:

- Non-determinism: Agents produce different outputs for the same input. If agents are making multiple calls per workflow, these variations can compound unpredictably.

- Sequence complexity: A single agent-based workflow can involve numerous steps, such as planning, execution, and rechecking, making it difficult to determine where the error or hallucination might have been introduced.

- Time-consuming for humans: As agents become more autonomous, developers and QA teams have to bear more of the burden of ensuring that the output is not only consistent but also correct.

Key indicators of LLM and agent performance

Here are some areas that are usually used to grade an LLM agent's performance.

- Hallucinations: Does the LLM invent facts or stray off-topic? An agentic system that confidently fabricates flight options or misquotes hotel prices can lead to real-world crises like people stranded in airports.

- Context relevance: How closely does the LLM or agent stick to the provided context? If your question concerns San Francisco hotels, you don't want the agent to offer dinner options in a different city.

- Answer relevance: Even if the context is correct, the actual answer or action must align with user needs. An agent that fetches flight options but ignores your budget and preferred time window is missing the mark.

- Context sufficiency: Does the LLM gather all the necessary information for a comprehensive answer or action? Sufficiency must be tested with reference to the gold or correct answer. An agent booking a flight must verify travel dates, preferences, and other constraints.

- Answer correctness: Finally, the agent's output must be factually correct. This might involve the right data (e.g., correct flight prices) and valid calculations or logic.

Together, these factors paint a more holistic portrait of an agent's performance than a single metric or score ever could.

Introducing Patronus for AI agent evaluation

Patronus AI was designed to address the evaluation challenges discussed above; it’s like a “watch dog” for multi-agent systems. It measures how effectively and accurately your agents communicate, collaborate, and ultimately achieve the user's goal.

Evaluating a Multi-Agent Travel Booking System with Patronus AI

To explain how Patronus AI can be used effectively, let's return to our two-agent scenario: Agent A manages flight comparison and booking, and Agent B manages hotel selection and booking. In a multi-agent setup like this, ensuring smooth interactions, accurate results, and reliable decision-making is critical. This is where Patronus comes in—it monitors, validates, and improves AI-driven workflows.

Ensuring orchestrator accuracy

The orchestrator's job is to delegate tasks correctly and synthesize results. Patronus Tracing and Evaluators can ensure that this happens without errors. For example, if a user requests a flight to Paris on June 1 and a three-night hotel stay, Patronus can verify that:

- The flight booking agent was actually queried for flights.

- The hotel booking agent was asked for a stay matching the correct dates.

If the orchestrator skips a step or queries the wrong agent, Patronus Evaluators will flag the mistake. Developers can define their own Judge Evaluators that analyze conversation logs, checking that each agent is contacted in the right order—similar to a unit test verifying API calls.

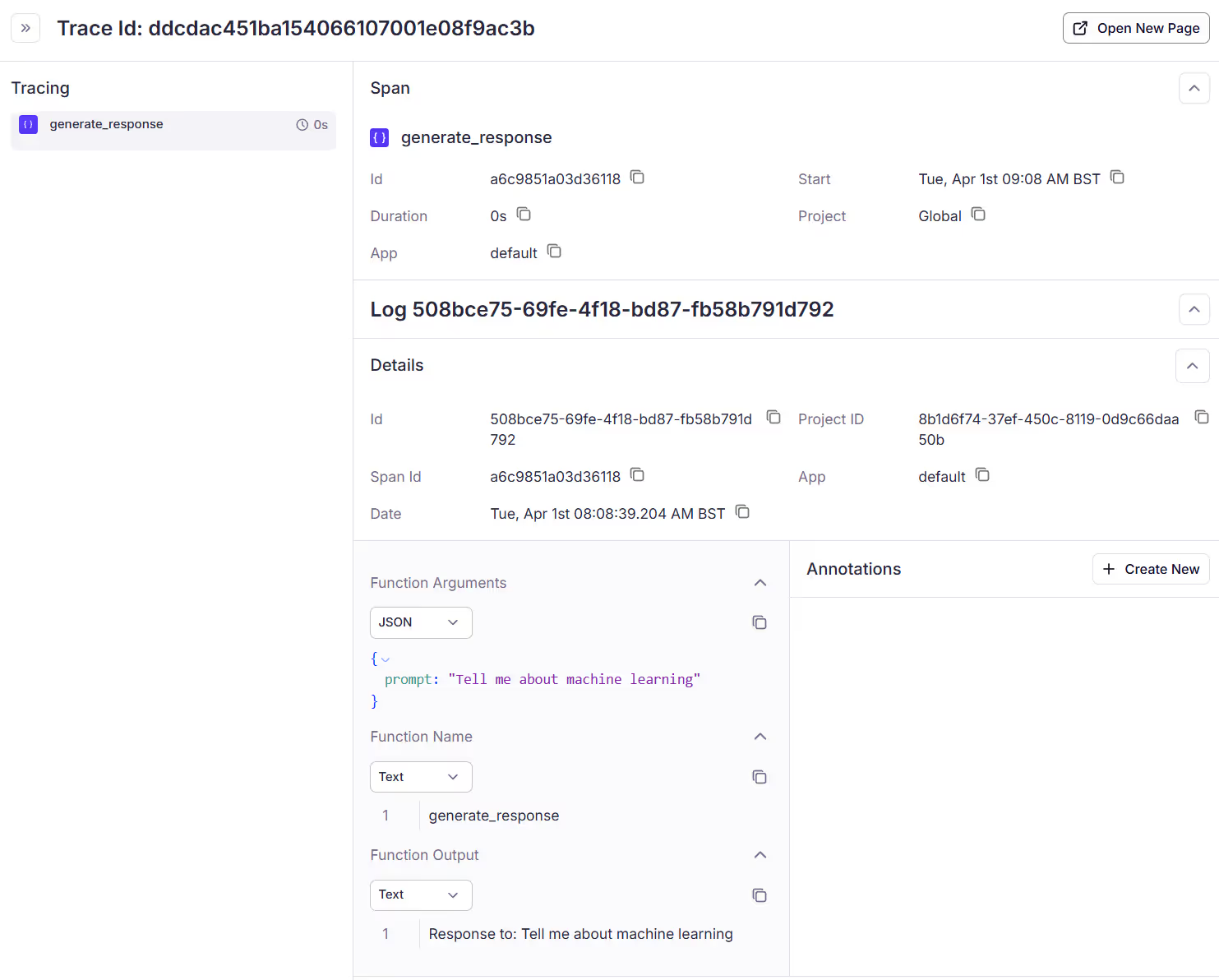

During real-time execution, Patronus Tracing logs every agent interaction with the platform so you can check whether the correct questions were asked and whether the responses sufficiently answered each request. This ensures that the orchestrator is delegating and synthesizing information efficiently.

Tracing with Patronus is simple. Patronus supports two approaches:

The first option is Function decorators. Wrapping response generation with @traced() decorator easily traces the entire function:

import patronus

from patronus import traced

patronus.init()

@traced()

def generate_response(prompt: str) -> str:

# Your LLM call or processing logic here

return f"Response to: {prompt}"

# Call the traced function

result = generate_response("Tell me about machine learning")

The second option uses Context managers to trace specific code blocks within functions:

import patronus

from patronus.tracing import start_span

patronus.init()

def complex_workflow(data):

# First phase

with start_span("Data preparation", attributes={"data_size": len(data)}):

prepared_data = preprocess(data)

# Second phase

with start_span("Model inference"):

results = run_model(prepared_data)

# Third phase

with start_span("Post-processing"):

final_results = postprocess(results)

return final_results

Validating agent responses

Booking flights and hotels isn’t just about finding options—it’s about ensuring that those options match the user’s request. Patronus Evaluators can detect context relevance and correctness issues. As an example, Patronus Evaluators will flag the mismatch if a user requests a flight on June 1, but the flight booking agent returns a flight on June 2. Similarly, if the hotel agent suggests a stay that doesn’t align with the flight’s arrival or departure dates, it identifies the inconsistency.

Patronus’s Exact Match Evaluator can compare expected outcomes to real outputs. For example, in a test scenario where the cheapest flight is known to be $500, Patronus can validate whether the agent retrieves the correct price.

Beyond accuracy, you can use Patronus Evaluators to check for response relevance. If the hotel agent starts providing tourist facts about Paris instead of a booking, Patronus’ Answer Relevance Evaluator detects the off-topic response, keeping interactions focused and efficient.

Additionally, Patronus evaluators can also check consistency across responses. If the flight arrives on June 2 but the hotel booking is for June 1–4, it flags the logical inconsistency, prompting a correction in the orchestrator’s logic.

Reducing AI hallucinations

LLM-based agents sometimes generate hallucinated results, like flights or hotels that don’t exist. You can mitigate this risk using the Patronus Lynx hallucination detection evaluator, which cross-references retrieved data with the agent’s answers.

For instance, if the flight booking agent is given a list of available flights but generates an option not on the list, Lynx detects the discrepancy and flags the response as unreliable. This context fidelity check ensures that only verified, real-world information is passed to the user. If a hallucination is detected, the orchestrator can take corrective action, such as discarding the response or prompting the agent to re-query the database.

Patronus acts as a safety net by catching these hallucinations before they reach users, preventing misinformation from creeping into AI-generated itineraries.

Optimizing decision-making

The system also tracks performance indicators like efficiency. If the orchestrator needs multiple back-and-forth queries to agents due to incomplete responses, Patronus Tracing will document the potential performance issue. A well-optimized workflow would aim for a seamless, one-shot coordination process.

Continuous improvement through iteration

Developers can use Patronus to run simulations of travel booking requests and analyze outcomes. By tracking success metrics like booking completion rate or a simulated user satisfaction score, Patronus helps teams identify weak points in the system.

For example, Patronus can ingest user feedback to provide actionable insights, such as if the system works well for simple trips but struggles with multi-city bookings. Developers can then adjust agent prompts, add clarification steps, or refine how the orchestrator synthesizes results. Over time, this iterative process builds a more robust, intelligent travel assistant—one that minimizes errors, eliminates hallucinations, and makes decisions that truly meet user needs.

Another useful tool is Patronus Experiments, which helps you move beyond vibe checks and make concrete, data-driven improvements to your GenAI system. Running an experiment is easy: select an evaluation dataset, test it against your system, and use evaluators to measure performance. Patronus provides ready-to-use datasets and evaluators, but you can always bring your own.

By integrating Patronus, AI-driven travel booking doesn’t just become automated—it becomes accurate, reliable, and consistently aligned with your expectations.

{{banner-dark-small-1="/banners"}}

Last thoughts

The rise of agentic AI represents a transformative technological shift. It enables autonomous systems to reason and perform complex tasks with minimal human intervention, placing its importance at the same level as past technological revolutions like personal computing and the Internet.

While this advancement offers immense potential to simplify and enhance our lives, it also raises significant challenges, including ethical concerns and data security. Fortunately, evaluation frameworks like Patronus can ensure trustworthiness, reliability, accuracy, performance, and alignment with human values.

%201.avif)