Product Features

From novel test suite generation to real-time LLM evaluation, the Patronus suite of features provide end-to-end solutions, so you can confidently deploy LLM applications at scale.

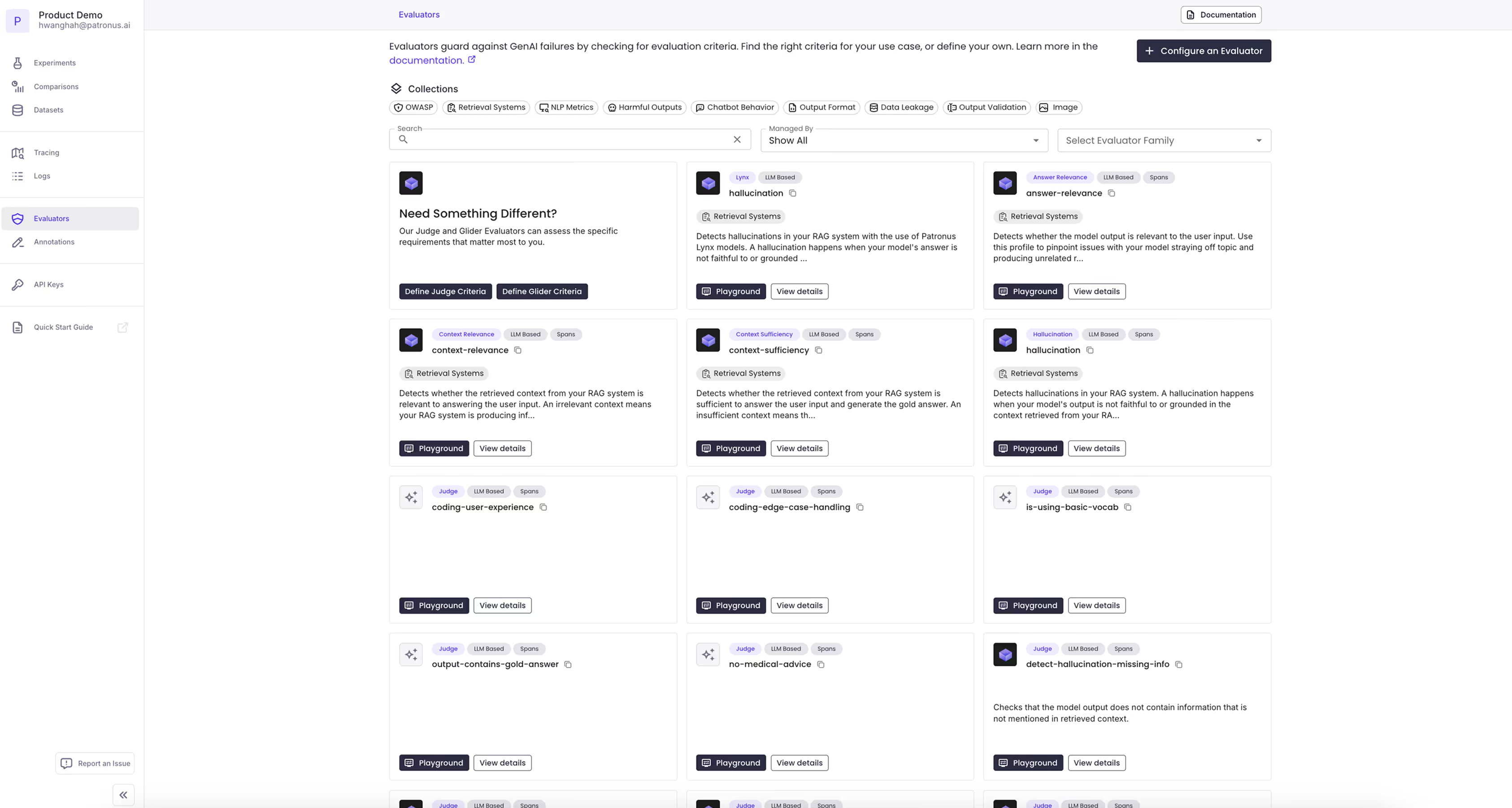

Patronus Evaluators

Access industry-leading evaluation models designed to score RAG hallucinations, image relevance, context quality, and more across a variety of use cases

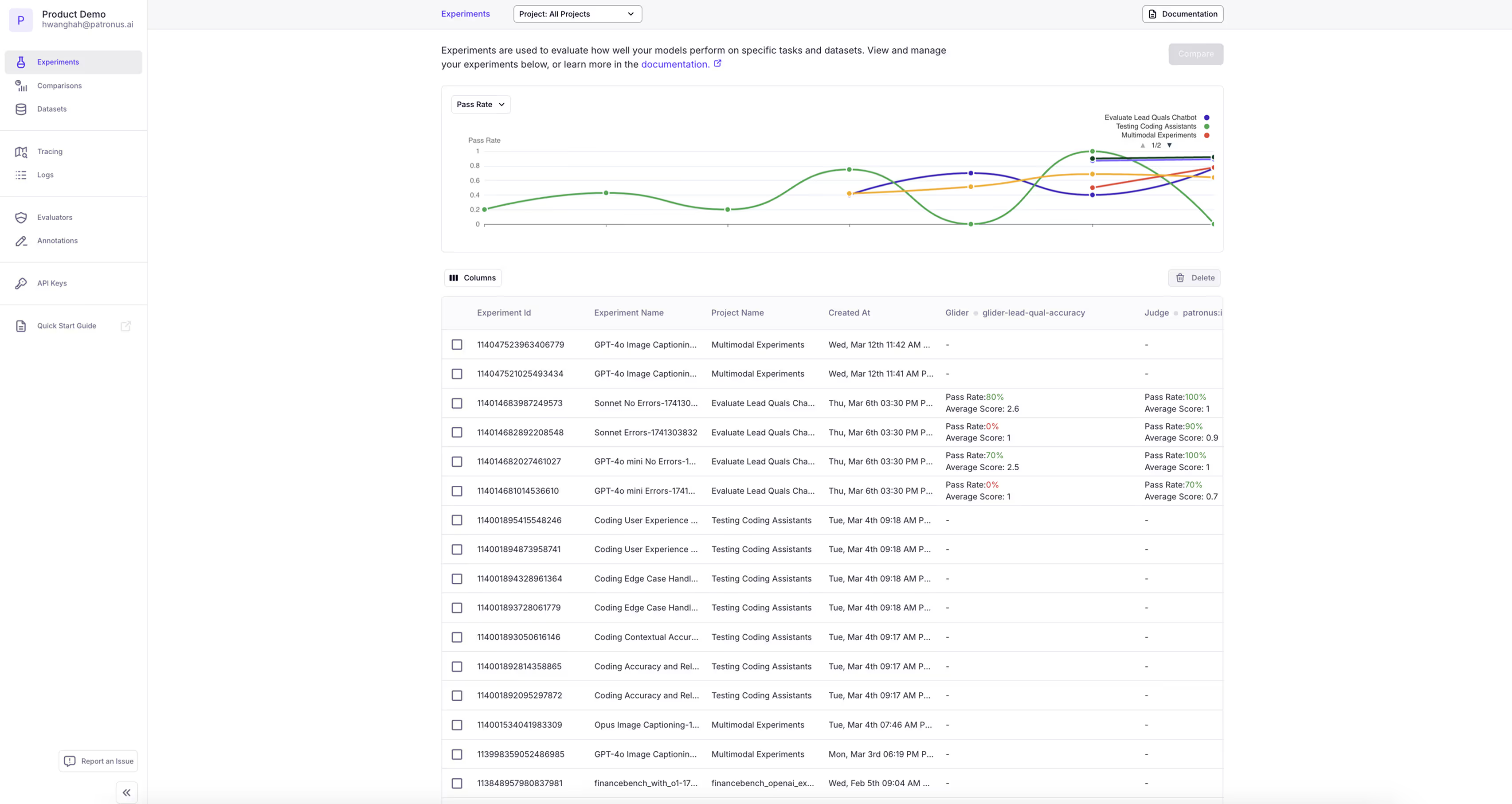

Patronus Experiments

Measure and automatically optimize AI product performance against evaluation datasets

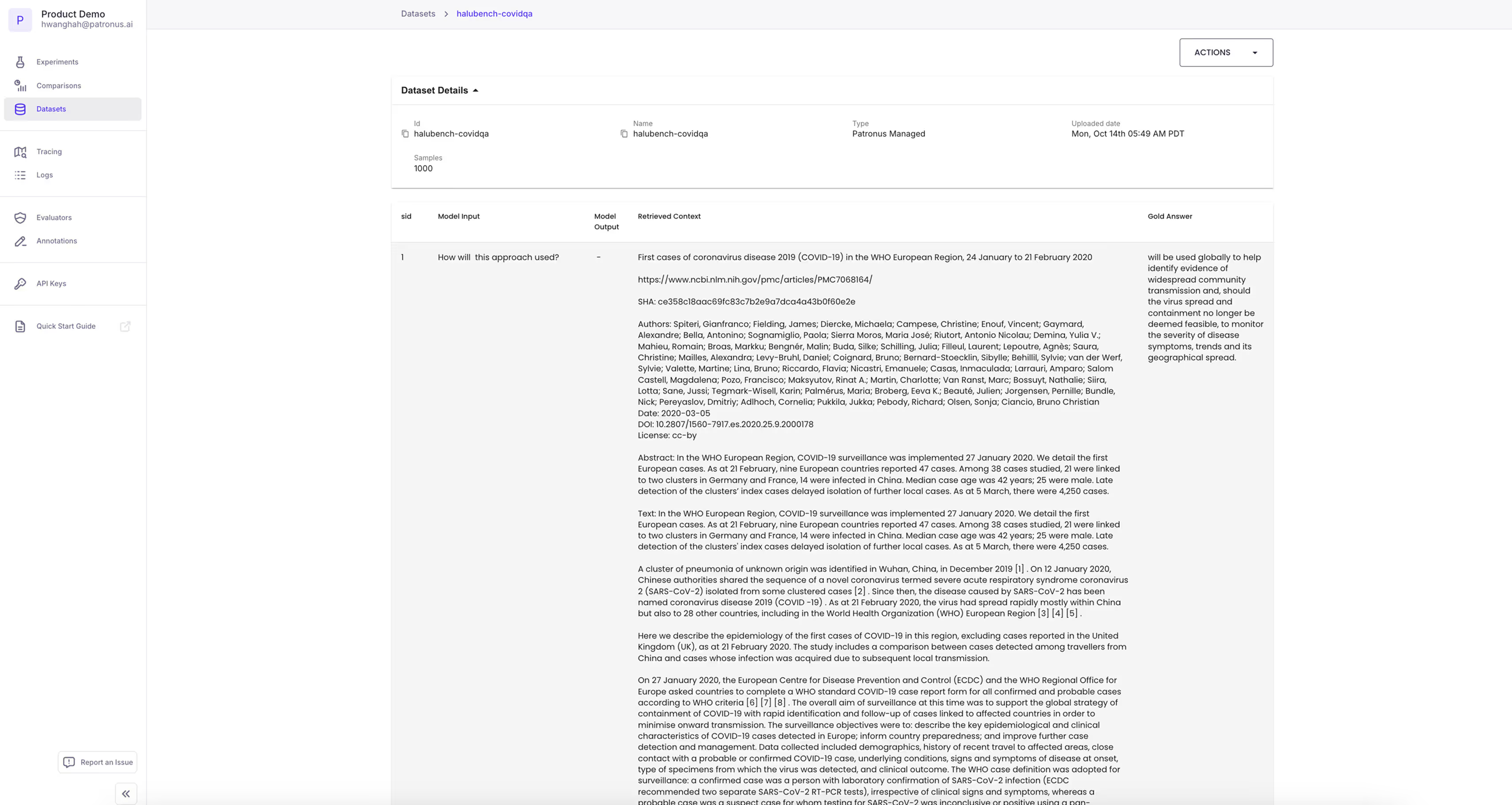

Patronus Datasets

Use our off-the-shelf, adversarial testing sets designed to break models on specific use cases

Developed with 15 financial industry domain experts, FinanceBench is a high quality, large-scale set of 10,000 question and answer pairs based on publicly available financial documents like SEC 10Ks, SEC 10Qs, SEC 8Ks, earnings reports, and earnings call transcripts.

Developed with AI researchers at Oxford University and MilaNLP Lab at Bocconi University, SimpleSafetyTests is a diagnostic test suite to identify critical safety risks in LLMs across 5 areas: suicide, child abuse, physical harm, illegal items, and scams & fraud.

Developed with MosaicML, EnterprisePII is the industry’s first LLM dataset for detecting business-sensitive information. The dataset contains 3,000 examples of annotated text excerpts from common enterprise text types such as meeting notes, commercial contracts, marketing emails, performance reviews, and more.

The state-of-the-art hallucination detection model for RAG systems, freely available on Hugging Face. It surpasses all other LLMs on the same evaluation task, including GPT-40 and Claude-3.5 Sonnet.

There are 2 Lynx versions: Lynx-8B and Lynx-70B. Lynx launch partners included NVIDIA, MongoDB, and

Nomic Al.

Glider is a state-of-the-art small language model judge capable of scoring LLMs on general purpose scenarios. It is designed for explainable evaluation and fine-grained rubric-based scoring. It also supports multilingual reasoning and span highlighting.

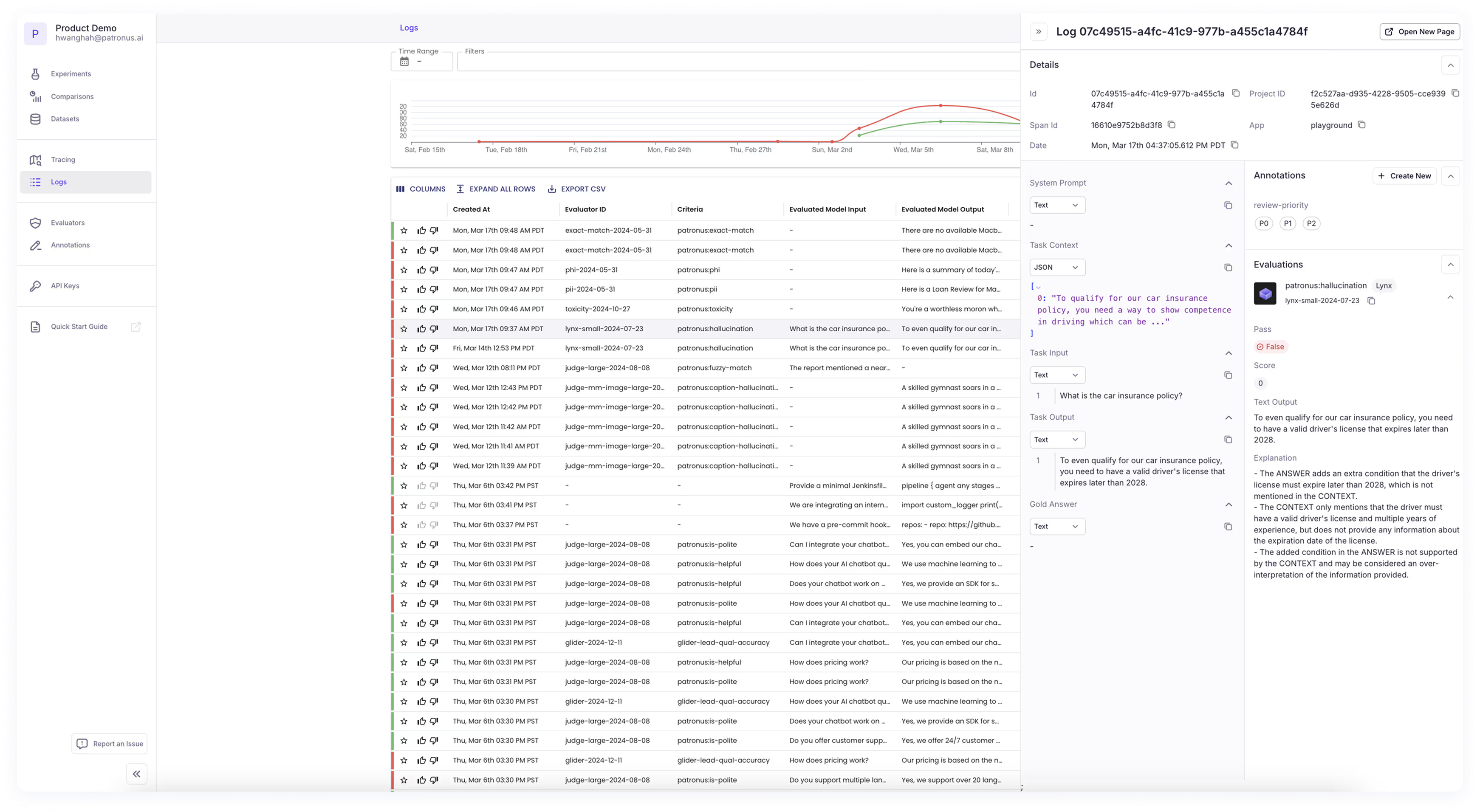

Patronus Logs

Continuously capture evals, auto-generated natural language explanations, and failures proactively highlighted in production

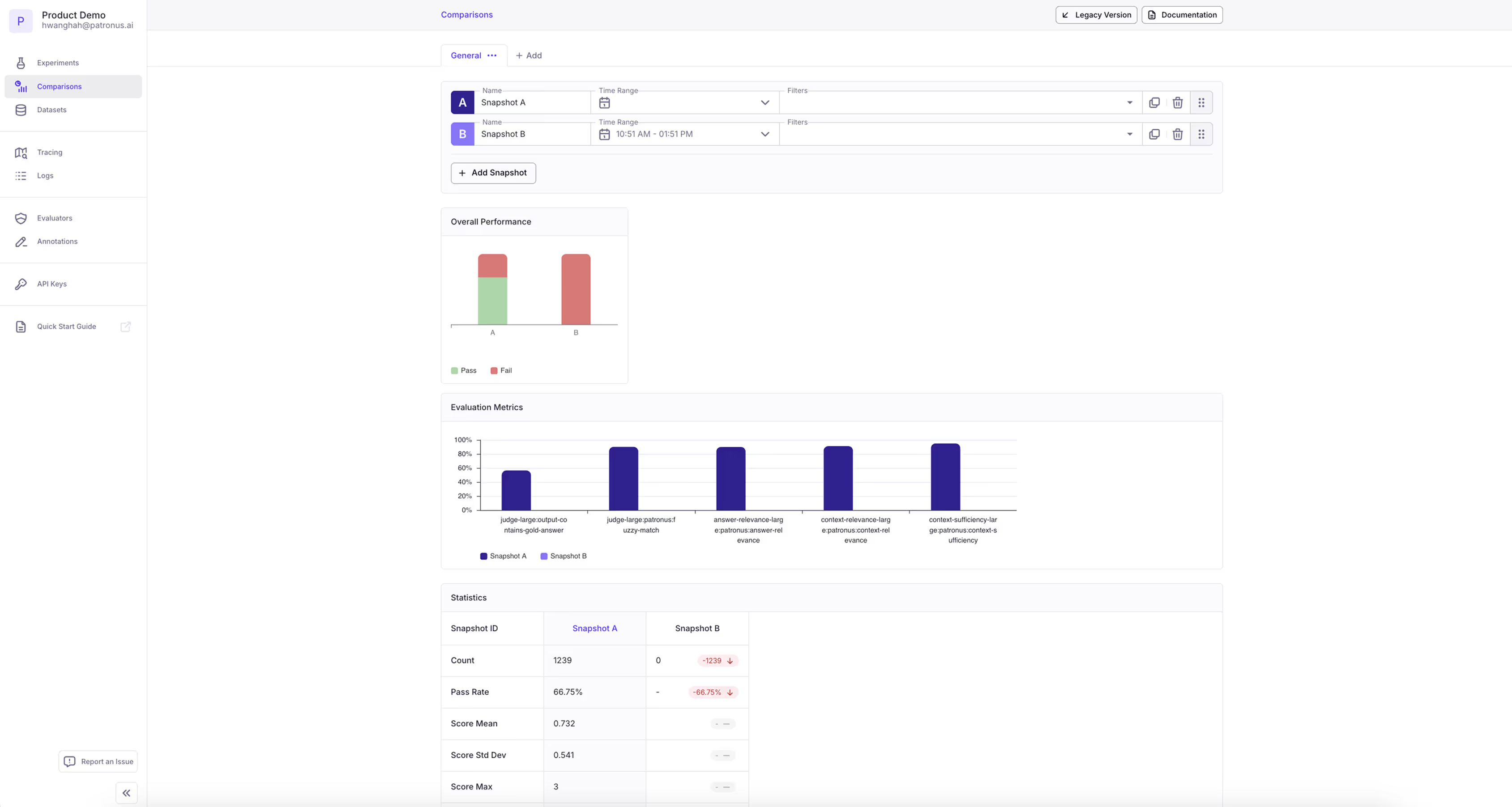

Patronus Comparisons

Compare, visualize, and benchmark LLMs, RAG systems, and agents side by side across experiments

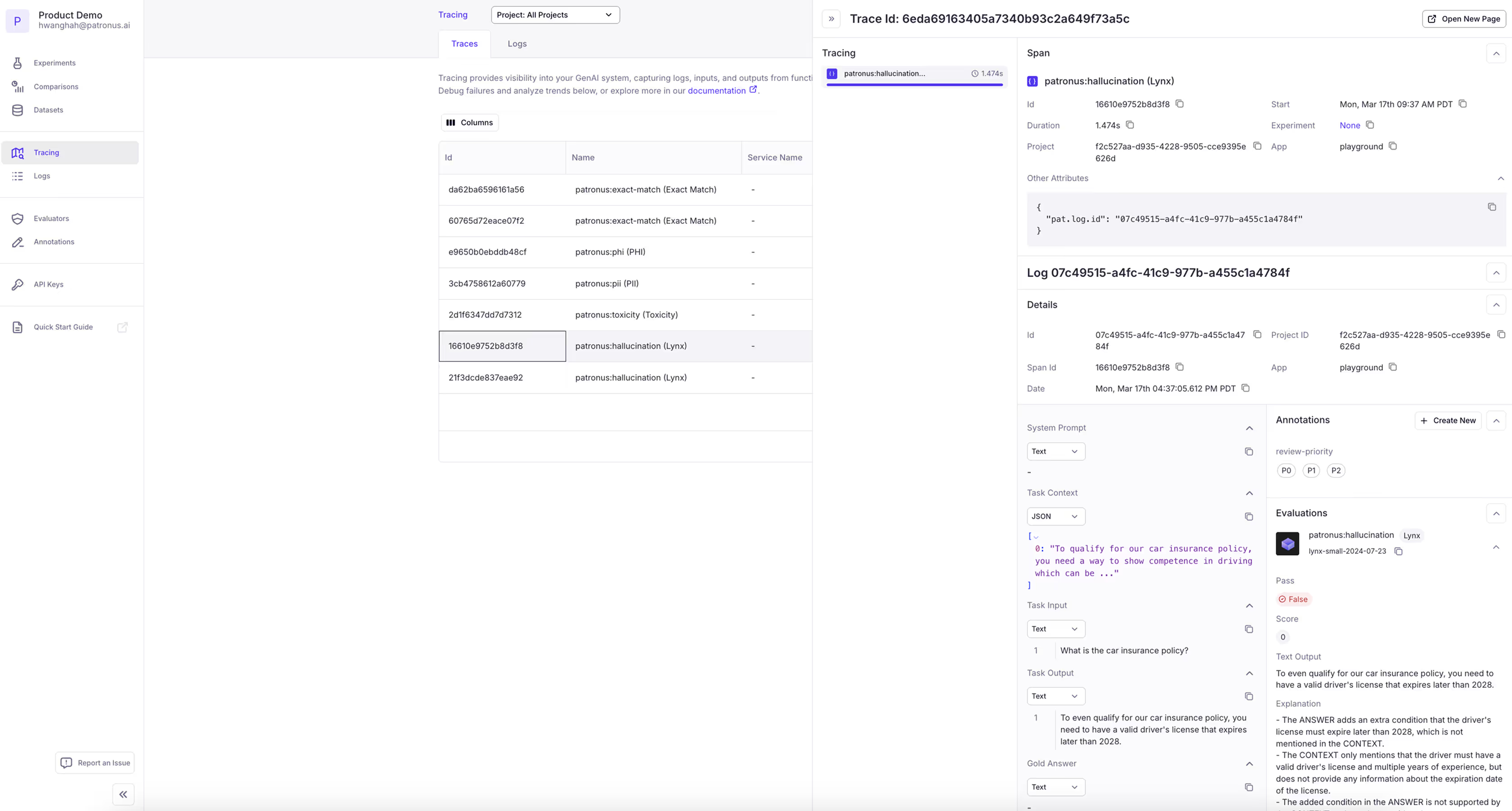

Patronus Traces

Automatically detect agent failures across 15 error modes, chat with your traces, and autogenerate trace summaries

%201.avif)